> I think you're overlooking the cost of installing all that tech in remote areas.
>
> Or are we all going to be confined to cities and flatland?

When did I say it would be instant?


Took a Waymo (driverless car) this week

- Thread starter RussR


> When did I say it would be instant?

When did I say that you said it would be instant?

Did you even look at the examples I provided?

> ...you don't even need stop signs anymore; the cars can negotiate among themselves how to get past each other or wait for a blockage, etc. A lot of other things beyond what I've pointed out, but our level of tech is way beyond the capabilities needed to make that kind of system work. But it's got to be a closed system.

...until the system is hacked and there are simultaneous mass casualties nationwide...

The biggest problem I see is decision making... the autonomous car is going to hit either the oncoming car approaching at a high closing rate, or the kid crossing the street, with no other option... which does the computer pick? For that matter, which does the human pick in that fraction of a second?

I would have thought they'd start in the express lanes of interstate highways, where there's no crossing traffic and no pedestrians: manually drive to the on-ramp, engage the autopilot, and resume manual control when exiting.

Larry in TN

En-Route

> The biggest problem I see is decision making... the autonomous car is going to hit either the oncoming car approaching at a high closing rate, or the kid crossing the street, with no other option... which does the computer pick?

That's not how a computer works. It has no morals. The autonomous car will attempt to avoid hitting everything.

If you're ever in Nashville, look me up. We'll go for an autonomous drive.

ElPaso Pilot

En-Route


> That's not how a computer works. It has no morals. The autonomous car will attempt to avoid hitting everything.
>
> If you're ever in Nashville, look me up. We'll go for an autonomous drive.

The autonomous car has no morals, but it makes choices based on weighted risk/harm outcome rankings that are decided upon when the algorithms are created.

Those risk rankings are generated by real people: engineers, programmers, and lawyers.

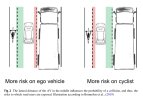

One example of predetermined risk weighting is shown in the image. The algorithm determines how much separation from the heavy truck to allocate to the vehicle and its owner, versus how much additional potential harm that shifts onto the cyclist.

Any additional protection allocated to the vehicle to mitigate uncertainty in the truck's path increases the cyclist's injury risk, and vice versa.

The “computer” will face cases where a collision cannot be avoided because of sudden movement by either party, or by an additional party, and in those cases it will by default be making pre-weighted choices.

I find this fascinating: people in a room far, far away are predetermining the fate of others simply by adjusting a risk weight factor.

The modern version of the old “Trolley Problem”.
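To make the truck/cyclist trade-off concrete, here is a toy sketch in Python. It is not Waymo's (or any vendor's) actual planner: the exponential risk-versus-clearance model, the 0.5 m decay scale, the 3.5 m gap, the 1.9 m car width, and the function names are all hypothetical. It just picks the lateral position that minimizes a weighted sum of risk to the occupant (from the truck) and risk to the cyclist, so you can see how nudging one weight moves the car.

```python
import math


def clearance_risk(clearance_m: float, scale_m: float = 0.5) -> float:
    """Hypothetical risk model: risk decays exponentially as clearance grows."""
    return math.exp(-clearance_m / scale_m)


def choose_lateral_position(gap_m: float, car_width_m: float,
                            w_occupant: float, w_cyclist: float,
                            steps: int = 100) -> tuple[float, float]:
    """Return (clearance to truck, clearance to cyclist) minimizing weighted risk.

    The truck's edge sits at 0 and the cyclist at gap_m; the car must fit between.
    A simple grid search over possible positions is used.
    """
    free_m = gap_m - car_width_m  # total clearance to split between the two sides
    if free_m < 0:
        raise ValueError("car does not fit in the gap")
    best = (0.0, free_m)
    best_cost = math.inf
    for i in range(steps + 1):
        to_truck = free_m * i / steps
        to_cyclist = free_m - to_truck
        # Weighted sum: occupant risk from the truck vs. injury risk to the cyclist.
        cost = (w_occupant * clearance_risk(to_truck)
                + w_cyclist * clearance_risk(to_cyclist))
        if cost < best_cost:
            best, best_cost = (to_truck, to_cyclist), cost
    return best


# Equal weights split the clearance evenly; raising the occupant weight
# gives the truck more room and leaves less for the cyclist.
for w in (1.0, 3.0):
    truck, bike = choose_lateral_position(3.5, 1.9, w_occupant=w, w_cyclist=1.0)
    print(f"w_occupant={w}: {truck:.2f} m from truck, {bike:.2f} m from cyclist")
```

The single weight ratio is the "risk weight factor" the post describes: someone has to pick it in advance, and the car's position follows directly from that choice.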

> The autonomous car has no morals, but makes choices based on weighted risk/harm outcome rankings that are decided upon when creating the algorithms.

Sounds like pre-programmed decision trees rather than actual intelligence.


NoBShere

Pre-takeoff checklist


Waymo has information on its website about its approach to safety that may interest some: https://waymo.com/safety/

Larry in TN

En-Route

> Those risk rankings are generated by real people: engineers, programmers, and lawyers.

What about when the programming is machine learning, not hand-coded, like Tesla's FSD v12?

Lindberg

Final Approach

> Is there enough data yet to show that the safety record of autonomous vehicles equals or exceeds that of manual drivers in all of the locations where vehicles are used?

Is there any evidence that they're worse? Human drivers are really bad.

Google Maps

Find local businesses, view maps and get driving directions in Google Maps. www.google.com


> Is there any evidence that they're worse? Human drivers are really bad.

Tolerance is lower for a machine killing a human.

Lindberg

Final Approach

> Tolerance is lower for a machine killing a human.

Apparently. Human drivers kill people tens of thousands of times a year, and everyone considers that normal. But until Tesla solves unsolvable philosophical riddles, nobody thinks we're ready for self-driving cars.

ElPaso Pilot

En-Route


> What about when the programming is machine learning, not hand-coded, like Tesla's FSD v12?

It's still there, but in a different way.

"Millions of videos", per Elon, have been used for network training.

Many of those needed to be sorted into "good" and "bad" behavior to set boundaries, as well as many "never do this" examples regarding traffic laws, etc. I'm sure their lawyers and safety analysts have a say, too.

You can't just dump all of the Tesla fleet video into a machine and have it autonomously decide how to drive based on what John Doe happens to do in a car when he thinks no one is watching.

Larry in TN

En-Route

> Many of those needed to be sorted into "good" and "bad" behavior to set boundaries, as well as many "never do this" regarding traffic laws, etc.

Sure, but none of those videos showed how to handle the hypothetical binary ethical decisions like whether to hit the kid or the car.

> Is there any evidence that they're worse? Human drivers are really bad.

I don't know. That's why I'm asking if anyone has seen comparative data.

I'm also wondering whether enough driverless vehicle data has been collected to allow a quantitative comparison. For example, the latest data I've seen for driving in general in the U.S. shows 1.37 deaths per 100 million miles traveled. That means driverless vehicles would need to log at least on the order of 100 million miles before it would be possible to conclude that their fatality record was equal to or better than that of human drivers.
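The arithmetic behind that point can be sketched in Python. This is a simplified illustration, assuming fatalities follow a Poisson process and that the driverless fleet logs all its miles with zero fatalities; it is not how regulators or Waymo actually evaluate safety, and the function name is hypothetical. Under those assumptions, the miles needed before a 95% upper confidence bound on the fleet's fatality rate drops to the 1.37-per-100-million human figure come out to roughly 219 million. Any observed fatality pushes the required mileage higher still.

```python
import math

# U.S. figure quoted in the post: 1.37 deaths per 100 million vehicle miles.
HUMAN_DEATHS_PER_MILE = 1.37 / 100_000_000


def miles_needed_with_zero_deaths(rate_per_mile: float,
                                  confidence: float = 0.95) -> float:
    """Miles a fleet must drive with zero fatalities before a one-sided
    upper confidence bound on its (Poisson) fatality rate falls to rate_per_mile.

    With zero events in N miles, P(0 deaths | rate r) = exp(-r * N).
    Setting that probability to 1 - confidence and solving gives N = -ln(1 - c) / r.
    """
    return -math.log(1.0 - confidence) / rate_per_mile


miles = miles_needed_with_zero_deaths(HUMAN_DEATHS_PER_MILE)
print(f"About {miles / 1e6:.0f} million fatality-free miles at 95% confidence")
```

At 95% confidence, -ln(0.05) is about 3, which is the familiar "rule of three": with zero events observed, the upper bound on the rate is roughly 3 divided by the exposure.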

Another issue is whether driverless vehicles have been tested in locations that might be especially challenging for them, such as narrow mountain roads or snowy conditions. It was proposed above to kick human drivers off the roads, but under such a proposal, unless we're going to prohibit people from traveling to such places by car, the testing of driverless vehicles needs to include such areas. I don't know whether that is being done.


Let'sgoflying!

Touchdown! Greaser!

Do they know to stop, if there is a fender bender, to share insurance info?

How would that sharing take place?

There are lots of accidents where the driver drove poorly, and goes to jail.

Who goes to jail when one is involved in, e.g., vehicular manslaughter?


Warlock

> I was in San Francisco this week and one broke down in front of the house… lit itself up with flashers and its ok pedestrian disco ball on the roof… blocked traffic for an hour and a half until a technician showed up.

I wonder how long it would have blocked traffic if that had happened on a snowy road on the way to a ski area.

Lindberg

Final Approach

> Do they know to stop, if there is a fender bender, to share insurance info?
>
> How would that sharing take place?

On a screen? Audibly? Via calling the 800 number on the outside of the vehicle?

> There are lots of accidents where the driver drove poorly, and goes to jail.
>
> Who goes to jail when one is involved in, e.g., vehicular manslaughter?

They can't drive drunk, and they can't consciously ignore an unreasonable risk of causing someone's death, so it's unclear how one could commit manslaughter. Perhaps you could argue some negligence or recklessness by the company that made them, though.

Lindberg

Final Approach

I like discussions like this because they're often good thought experiments. But identifying difficult corner cases doesn't mean an idea is unviable even if 100% of the problems can't be solved.

> I like discussions like this because they're often good thought experiments. But identifying difficult corner cases doesn't mean an idea is unviable even if 100% of the problems can't be solved.

I just get grumpy when people talk about banning human drivers from the roads without thinking it through.

Lindberg

Final Approach

> I just get grumpy when people talk about banning human drivers from the roads without thinking it through.

It's entirely possible that there will be some roads in the future that are off-limits to human drivers, the way HOV lanes are restricted now. Autonomous vehicles that are talking to each other can drive much more efficiently than human-operated vehicles in certain circumstances. But it won't be everywhere. And not all restaurants will be Taco Bell.

Flatiowa

Pre-takeoff checklist


> And not all restaurants will be Taco Bell

I thought they all become Carl's Jr...

> I thought they all become Carl's Jr...

That certainly seems to be more the path we're on.

Daleandee

Final Approach


In a few years driverless cars will be old news and you can go across town in a pilotless drone ...

Ehang 216-S

Certification info


> In a few years driverless cars will be old news and you can go across town in a pilotless drone ...

Not.


Albany Tom

> Do they know to stop, if there is a fender bender, to share insurance info?
>
> How would that sharing take place?
>
> There are lots of accidents where the driver drove poorly, and goes to jail.
>
> Who goes to jail when one is involved in, e.g., vehicular manslaughter?

I think this is the best question in the thread. It addresses an issue that's been around for a long time: the software industry is almost immune from meaningful regulation or legislation. Well, I'll correct that: we DO have regulations protecting software companies, but not many the other way around.

We pick on Boeing for not being able to build an airplane that doesn't have parts flying off of it, yet every major software vendor I'm aware of is in a continual cycle of releasing products with serious flaws. The problem is so pervasive that every industry that deals with confidential data has regulations requiring software to be kept "current", meaning that entire industries are built around profiting from the continued release of bad products.

So if self-driving cars can fix the software industry, that would be great. If someone builds a car that is generally safer than the average driver, that's also great. But if that car drives into a group of firemen, then that needs to be a liability for that company. And if their processes to develop that software weren't adequate for the risk they were dealing with? Well, then somebody could be going to jail. That last part doesn't seem very likely in this country, but it may be in others. Maybe this will drive us (accidental pun there) to a place where the software is open source and universal? In part I say that because I'm not sure how well the Bosch and Toyota versions of "we drive u" will play along with each other, and if we get Microstuff involved we're all going to die.

SkyChaser

Pattern Altitude


I'm looking forward to the "if you want to avoid the upcoming collision, just watch this ad!" and the "if you would like to unlock the car, fill out this quick, five-question survey about whether or not you like ketchup with your fries!" pop-ups.

Let'sgoflying!

Touchdown! Greaser!

> I like discussions like this because they're often good thought experiments. But identifying difficult corner cases doesn't mean an idea is unviable even if 100% of the problems can't be solved.

But it is OK to think about and talk about potential problems (even unlikely ones) and their solutions, yes?

Zeldman

Touchdown! Greaser!

If a car with a driver is involved in an accident with a driverless car, who will the driver argue with?

Let'sgoflying!

Touchdown! Greaser!

Ghery

Touchdown! Greaser!


> Waymo knows where stop signs are and, from what I have observed, comes to a full stop at each one, unlike human drivers. They also know the speed limit. I can see how some unusual situations would confuse them, but stop signs, stop lights, and speed limits are predictable. I've seen them around here for 4-5 years; first in the testing phase with safety drivers; then alone; and now carrying paying passengers. I haven't had the occasion to take one, but I have the app and might someday. I am actually more cautious of them as a pedestrian and bicyclist than I think I would be as a passenger.

But things like emergency vehicles, pedestrians, objects in the road, blocked lanes, etc., aren't.


My Ford Escape knows about speed limits. It does not know about those.

> Thanks, that's a lot more than I knew when I got up this morning..!!

Me, too.

> I just get grumpy when people talk about banning human drivers from the roads without thinking it through.

Welcome to the club.

> We pick on Boeing for not being able to build an airplane that doesn't have parts flying off of it, yet every major software vendor I'm aware of is in a continual cycle of releasing products with serious flaws. The problem is so pervasive that every industry that deals with confidential data has regulations requiring software to be kept "current", meaning that entire industries are built around profiting from the continued release of bad products.

This is not a new problem. I remember about 40 years ago when a shop in Silicon Valley that specialized in rebuilding MGs had a bumper sticker for sale (that I regret not buying) that said, "I'll have you know that the parts falling off this car are of the highest British quality!"

Lindberg

Final Approach

> But it is ok to think about, talk about potential problems (even unlikely ones) and their solutions, yes?

As I said in the post you quoted. It does get comical, though, when the peanut gallery assumes the designers and engineers who have been working with these things on actual roads for years haven't thought of obvious things like ice or emergency vehicles.


> Welcome to the club.

I think many of us who live west of the Continental Divide know that roads that driverless cars would have trouble with are less of a "corner case" than some would have us believe.

> As I said in your quote. It does get comical though when the peanut gallery assumes the designers and engineers who have been working with these things on actual roads for years haven't thought of obvious things like ice or emergency vehicles.

I don't doubt that they have thought of those things. However, I would like to know how far along they are in testing driverless cars on roads and in conditions that are not uncommon from the Rockies on west.

Larry in TN

En-Route

> I think many of us who live west of the Continental Divide know that roads that driverless cars would have trouble with are less of a "corner case" than some would have us believe.

Watch some videos from the https://www.youtube.com/@WholeMars YouTube channel. He drives around California in his Model S with FSD v12.

Many of his drives are without any driver intervention, and the Model S is not geofenced, nor does it use any advanced sensors: just cameras.

Everskyward

Experimenter


> I think many of us who live west of the Continental Divide know that roads that driverless cars would have trouble with are less of a "corner case" than some would have us believe.

I think driving around the congested parts of San Francisco would be more difficult than driving on a mountain road (I have done both). There are many more unpredictable events in the City: double-parked vehicles and clueless or aggressive pedestrians, bicyclists, motorcyclists (lane splitting is legal here), and drivers, to name a few. Waymo seems to have done OK. Not perfect, but OK. Human drivers are far from perfect.

Shepherd

Final Approach

> So, if a driverless car runs a stop sign, who gets the ticket?

In Turkey (while I was stationed there), if you hire a cab and it gets into an accident, the passenger pays for the damages and compensates the victim for any loss or injury. If someone is killed, the passenger goes to jail.

The reason: The cab wouldn't be there if you hadn't hired it, so it's your fault.

I was a witness to this while I was there.

Albany Tom

Our systems suck in so many ways, but there are a lot of places that are MUCH worse. Thanks for the reminder...

Daleandee

Final Approach


> In Turkey, (while I was stationed there), if you hire a cab and it gets into an accident, the passenger pays for the damages and compensates the victim for any loss or injury. If you kill someone the passenger goes to jail.

I was there in the '80s and was surprised at the four-door Chevy cabs from the '50s and '60s that were there:


Old Turkish cabs